Identifying AI-generated fake celebrity endorsements

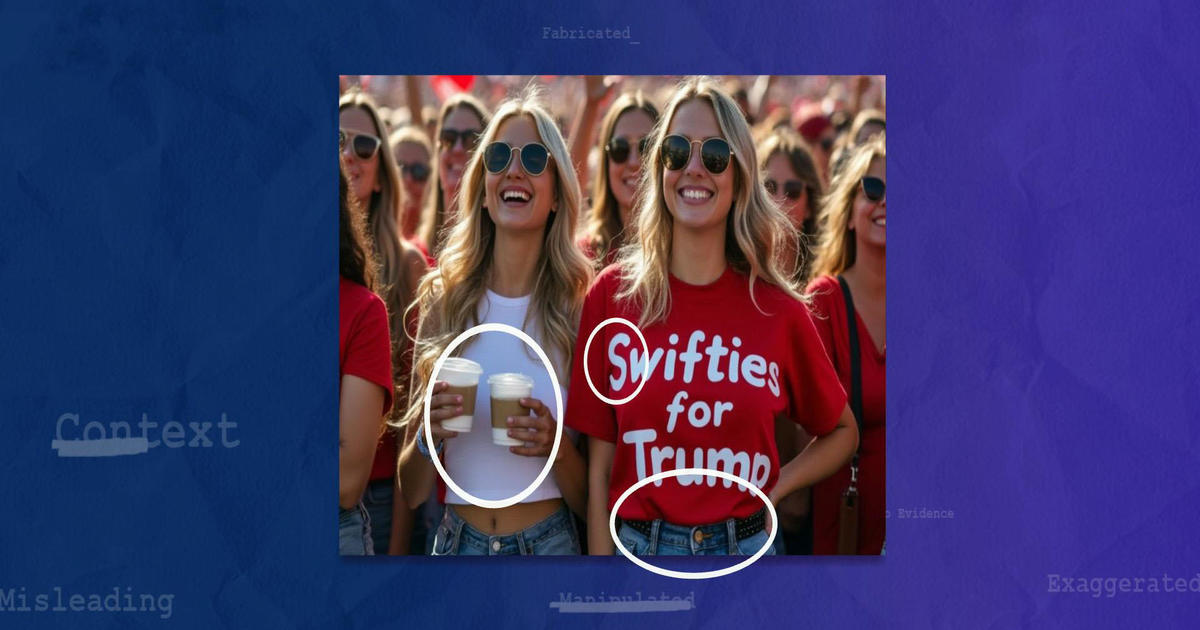

A recent surge of AI-generated images and videos on social media has produced fake endorsements of political candidates by celebrities such as Elton John, Will Smith, and Taylor Swift. These fabricated media aim to deceive viewers into thinking that these influential figures back specific candidates, such as former President Donald Trump.

One notable incident involved a photo of Elton John wearing a pink coat emblazoned with the letters “MAGA,” implying his support for Trump. The image was entirely fake, generated with artificial intelligence. The likenesses of other celebrities, including Will Smith and Taylor Swift, have been used in the same way to falsely claim endorsements of political figures such as Trump.

When Taylor Swift endorsed Vice President Kamala Harris on Instagram, she mentioned the AI-generated images that falsely portrayed her as supporting Trump. Swift emphasized the importance of transparency and of clarifying her actual stance on the election, drawing attention to the need for authenticity in political endorsements from public figures.

Another instance involved an AI-generated video of Will Smith and Chris Rock dining with Trump, which garnered more than 700,000 views. Such misleading media highlight the growing concern over the spread of manipulated content and its potential impact on public perception.

Identifying AI-Generated Content

According to Claire Leibowicz, head of the AI and Media Integrity Program at the Partnership on AI, there are several ways to identify AI-generated images and videos:

- Look for signs of airbrushing or manipulation that defy natural laws.

- Check for visual inconsistencies, such as unusual placements of objects or features within the image.

- Use a reverse image search tool such as Google Lens to locate the original source of the content.

Leibowicz described being deceived herself by an AI-generated image of Pope Francis, emphasizing the need for experts and journalists to help authenticate digital content. The prevalence of such deceptive media raises concerns about its potential impact on public discourse and the upcoming elections.

A study by the Polarization Research Lab found that many Americans are apprehensive about the impact of AI on elections, with nearly half believing it will worsen the electoral process. This sentiment underscores the importance of combating misinformation and safeguarding the integrity of democratic processes.

Sam Gregory, executive director of Witness.org, highlighted the role of powerful leaders in disseminating misleading information during elections. The Department of Homeland Security has also issued warnings about the challenges posed by AI-generated media, underscoring the need to address the risks of fake content proliferating online.

As the November presidential election approaches amid growing concern about misinformation, efforts to educate the public, verify digital content, and promote transparency are paramount. Vigilant scrutiny of online media and proactive measures to counter false narratives are crucial to safeguarding the democratic process.

As AI-generated content becomes more prevalent, fostering media literacy and critical thinking is imperative to preserving the integrity of public discourse.